1. Introduction

In the previous post we looked at using a single BGP AS to scale the enterprise beyond what a single IGP can handle. In this post I’ll cover the book’s second design, based around using a separate AS to contain each regional IGP network.

2. External BGP Core Architecture

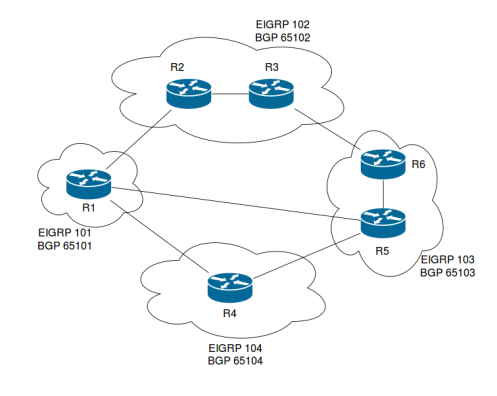

This is the diagram presented to you in the book:

Using this design we maintain some of the benefits of the iBGP design from the previous post, but also create more administrative separation between the different regions. If different teams are maintaining the networks in each region, having each region be its own AS offers advantages over having a single AS; changes in one region are less likely to impact other regions.

A problem with this design is that routing decisions are now tied to the eBGP path selection process. With an iBGP core, the IGP metric is used as the default tie breaker in the best path selection process, providing some visibility into the underlying network. This is now gone since we’ve split the network into separate ASes, each with its own IGP process. Instead, we have to rely on attributes such as AS_PATH, MED, and the neighbor router ID. This isn’t necessarily a problem if you don’t care which link you use to reach the other ASes. However, if the shortest AS_PATH uses a link that you do not want to use, you have to modify the BGP attributes to change the path selection, which introduces some extra complexity.
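To make the tie-breaking concrete, here is a minimal Python sketch of a heavily simplified path comparison. It is not the full best path algorithm: it only looks at local preference, AS_PATH length, MED, and neighbor router ID, and it compares MED across all paths, which real implementations do not do by default. The `Path` class, the AS numbers, and the router IDs are all made up for illustration.

```python
from dataclasses import dataclass, replace
from ipaddress import IPv4Address
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Path:
    """One candidate path for a prefix (hypothetical, simplified model)."""
    as_path: Tuple[int, ...]   # AS numbers the route has traversed
    med: int                   # MED advertised by the neighbor
    neighbor_router_id: str    # BGP router ID of the advertising neighbor
    local_pref: int = 100      # locally assigned preference


def preference_key(path: Path):
    # The smallest tuple wins: higher local preference, then shorter
    # AS_PATH, then lower MED, then lower neighbor router ID.
    return (
        -path.local_pref,
        len(path.as_path),
        path.med,
        int(IPv4Address(path.neighbor_router_id)),
    )


def best_path(paths: Iterable[Path]) -> Path:
    return min(paths, key=preference_key)


# Two ways to reach a prefix originated in (hypothetical) AS 65004.
direct = Path(as_path=(65004,), med=0, neighbor_router_id="10.0.0.4")
via_as2 = Path(as_path=(65002, 65004), med=0, neighbor_router_id="10.0.0.2")

# With default attributes the shorter AS_PATH wins.
print(best_path([direct, via_as2]).as_path)

# To avoid the direct link, an attribute has to be changed by policy,
# here by raising local preference on the longer path.
preferred = replace(via_as2, local_pref=200)
print(best_path([direct, preferred]).as_path)
```

The second call is the "extra complexity" in practice: nothing about the underlying links is visible to the comparison, so steering traffic away from the direct link means deliberately overriding an attribute.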

3. Link And Device Failures

The book focuses on eBGP link failures since failures within each AS are handled by that region’s IGP. In the iBGP-only design, we learned that the core IGP could route around link failures, with the result that the BGP network could reconverge as fast as the IGP. In this topology, that is not possible, and instead we have to rely on BGP’s own slower reconvergence mechanisms.

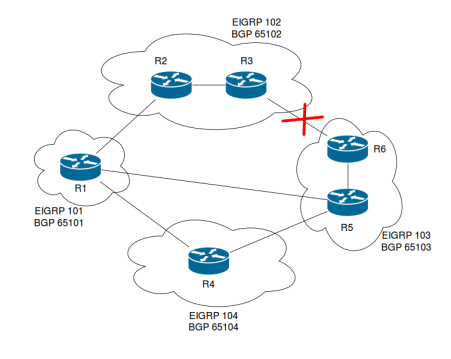

The first step when a link fails is to make BGP detect this failure. That of course sounds self-evident, but the speed at which the failure is detected greatly impacts the total time it takes to install an alternate path. Let’s assume that the link between R3 and R5 fails:

If R3 and R5 are directly connected, the bgp fast-external-fallover feature will tear down the BGP session immediately (unless it has been manually disabled). However, if some intermediary device (e.g. an L2 switch) is preventing the low-level protocols from detecting a loss of signal, the BGP session on one or both sides will stay up until the holdtime expires. With the default timers, this would be an unacceptably long 3-minute wait. To improve this you would have to decrease the holdtime and, consequently, send keepalives more often. Since this book came out in 2004, it doesn’t mention a certain protocol that was designed to solve this problem.
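The trade-off between holdtime and keepalive traffic is easy to put numbers on. The sketch below assumes the common convention that the keepalive interval is one third of the holdtime (which matches a 60-second keepalive for the 3-minute holdtime above) and that keepalives arrive exactly on schedule with no jitter. The function names are my own.

```python
from typing import Tuple


def keepalive_for(holdtime: int) -> int:
    """Keepalive interval in seconds, assumed to be one third of the holdtime."""
    if holdtime != 0 and holdtime < 3:
        # BGP only allows a holdtime of zero or at least three seconds.
        raise ValueError("holdtime must be 0 or at least 3 seconds")
    return holdtime // 3


def detection_window(holdtime: int) -> Tuple[int, int]:
    """Best and worst case seconds between a silent failure and session teardown.

    The hold timer restarts on every received message, so the delay depends
    on how long before the failure the last keepalive arrived.
    """
    return holdtime - keepalive_for(holdtime), holdtime


def keepalives_per_hour(holdtime: int) -> int:
    """Keepalives sent to one neighbor per hour (0 when keepalives are off)."""
    interval = keepalive_for(holdtime)
    return 0 if interval == 0 else 3600 // interval


for holdtime in (180, 30, 9):
    best, worst = detection_window(holdtime)
    print(
        f"holdtime={holdtime:>3}s keepalive={keepalive_for(holdtime):>2}s "
        f"detection={best}-{worst}s keepalives/hour={keepalives_per_hour(holdtime)}"
    )
```

Even at a 9-second holdtime the failure goes unnoticed for several seconds, while each neighbor now costs twenty times as many keepalives as with the defaults.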

Once the link failure has been detected and the BGP session is torn down, the best path selection is run using a BGP RIB that no longer includes the prefixes received from the now dead neighbor. When that process is complete, the neighbors that are still alive are sent UPDATE messages reflecting the result. These can carry both new best paths and withdrawals.
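As a rough illustration of that sequence, the following Python sketch removes a dead neighbor's paths from a toy RIB, reruns a deliberately naive selection (shortest AS_PATH only), and collects what would have to be sent to the remaining neighbors. The data layout, the prefixes, the AS numbers, and the neighbor R4 are assumptions for the example; only R3 and R5 come from the scenario above.

```python
from typing import Dict, List, Optional, Tuple

AsPath = Tuple[int, ...]
# prefix -> {neighbor name -> AS_PATH learned from that neighbor}
Rib = Dict[str, Dict[str, AsPath]]
Update = Tuple[str, str, Optional[AsPath]]


def best(paths: Dict[str, AsPath]) -> Optional[Tuple[str, AsPath]]:
    """Naive selection: shortest AS_PATH, ties broken by neighbor name."""
    if not paths:
        return None
    return min(paths.items(), key=lambda item: (len(item[1]), item[0]))


def reconverge(rib: Rib, dead_neighbor: str) -> List[Update]:
    """Drop everything learned from dead_neighbor and report what changed."""
    updates: List[Update] = []
    for prefix, paths in rib.items():
        old_best = best(paths)
        paths.pop(dead_neighbor, None)      # session is down, forget its paths
        new_best = best(paths)
        if new_best == old_best:
            continue                        # best path unaffected, nothing to send
        if new_best is None:
            updates.append(("withdraw", prefix, None))
        else:
            updates.append(("announce", prefix, new_best[1]))
    return updates


# Toy RIB on R3 before the link to R5 fails.
rib_on_r3: Rib = {
    "10.5.0.0/16": {"R5": (65005,), "R4": (65004, 65005)},
    "10.6.0.0/16": {"R5": (65005, 65006)},
    "10.4.0.0/16": {"R4": (65004,)},
}

updates = reconverge(rib_on_r3, dead_neighbor="R5")
for kind, prefix, as_path in updates:
    print(kind, prefix, as_path)
```

The first prefix falls back to the longer path through R4, the second has no alternative and is withdrawn, and the third never depended on R5 so it generates no update at all.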

Finally, the author points out that this topology is not ideal for implementing certain types of routing policy. For example, if you want a certain AS to not be able to reach another AS, you cannot simply remove those prefixes from a particular AS. This is because all ASes could act as transit for other ASes. Instead, if the goal is to prevent connectivity between ASes, you would have to filter inbound traffic reaching the BGP routers.
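One way to read the transit problem is as a reachability question: a prefix filtered on one eBGP session can still be learned through a third AS. The sketch below models this with a breadth-first search over AS peerings. The full-mesh topology and AS numbers are assumptions, not the book's diagram, and the model ignores every policy other than the blocked sessions.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Set


def learns_prefix(
    peerings: Dict[int, List[int]],
    origin: int,
    target: int,
    blocked_sessions: Set[FrozenSet[int]],
) -> bool:
    """Return True if a prefix originated in `origin` can propagate to `target`.

    Every AS re-advertises what it learns (acts as transit) unless the
    session to that peer is listed in blocked_sessions.
    """
    seen = {origin}
    queue = deque([origin])
    while queue:
        current = queue.popleft()
        if current == target:
            return True
        for peer in peerings[current]:
            if peer in seen or frozenset((current, peer)) in blocked_sessions:
                continue
            seen.add(peer)
            queue.append(peer)
    return False


# Hypothetical full mesh of four regional ASes.
ases = [65001, 65002, 65003, 65004]
peerings = {a: [b for b in ases if b != a] for a in ases}

# Filtering only the direct session between 65001 and 65004 is not enough.
direct_only = {frozenset((65001, 65004))}
print(learns_prefix(peerings, 65001, 65004, direct_only))

# The prefix has to be stopped on every session leading into 65004.
all_sessions = {frozenset((a, 65004)) for a in ases if a != 65004}
print(learns_prefix(peerings, 65001, 65004, all_sessions))
```

In this toy model the first check still finds a path through the other regions, which is why a filter on a single session does not isolate two ASes from each other.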

In the next post we’ll cover the book’s final design, which uses both iBGP and eBGP in a hub-and-spoke topology.