1. Introduction

In the previous post we looked at the difference between a point to point and a multiaccess interface. We came to the conclusion that multiaccess interfaces like Ethernet and Frame Relay require a mapping between IP addresses and link layer addresses in order to create frames. This binding is then used to direct the outgoing frame to the correct remote interface on the link. We then briefly talked about mGRE and how it has a conceptually similar addressing problem. In this post we’ll explore how this problem is solved using the Next Hop Resolution Protocol (NHRP).

2. Static NHRP Mapping

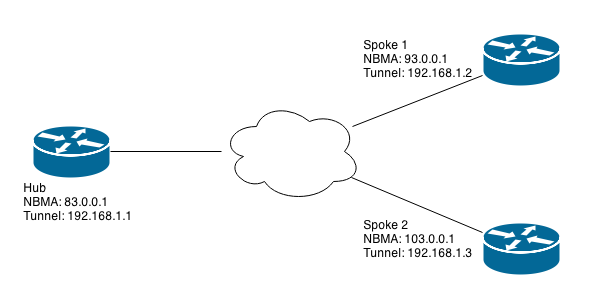

The simplest way to solve the mapping problem between the NBMA address (the “outside” address) and the tunnel IP address is to use static mappings. This is normally not done because it kind of removes the dynamic part of DMVPN. I’m demonstrating it here because it very clearly illustrates what we are trying to do. Let’s say that you have this very simple topology:

The public addresses (NBMA in NHRP speak) are 83.0.0.1 on the hub and 93.0.0.1/103.0.0.1 on the spokes. The network 192.168.1.0/24 is used inside the tunnel. Each spoke has a normal point to point GRE tunnel pointing to the hub, but the hub is using mGRE to decrease the amount of required configuration and make things more administratively palatable.

The hub currently has the following configuration:

!

int Tunnel0

ip address 192.168.1.1 255.255.255.0

tunnel source 83.0.0.1

tunnel mode gre multipoint

!

A spoke:

!

interface Tunnel0

ip address 192.168.1.3 255.255.255.0

tunnel source 93.0.0.1

tunnel destination 83.0.0.1

!

We don’t have reachability at this point because the hub has no way of knowing what destination IP address it should put in the outer header when it encapsulates packets destined to the spoke. We know that it should be 93.0.0.1, but that doesn’t help our hub. Since we know that we need to use the NBMA address 93.0.0.1 to reach 192.168.1.3 we could explicitly configure this on the hub like this:

!

int Tunnel0

ip address 192.168.1.1 255.255.255.0

tunnel source 83.0.0.1

tunnel mode gre multipoint

ip nhrp map 192.168.1.3 93.0.0.1

ip nhrp network-id 1

!

The hub is now able to encapsulate packets leaving the router through the tunnel interface to 192.168.1.3 with the correct public IP address. Note that the locally significant nhrp network-id must also be configured. It’s conceptually similar to the OSPF process ID. You could think of this configuration as a static ARP entry, and it’s obviously not a scalable solution since every single spoke would require its own individual entry (and an additional static multicast entry if you want to run a routing protocol). Instead, we want to deploy a more dynamic solution to the problem.
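To make the scaling problem concrete, here is a rough sketch of what the hub would need once the second spoke is added statically. The tunnel address 192.168.1.2 for the spoke behind 103.0.0.1 is an assumption for illustration (it is not stated above), and the `ip nhrp map multicast` lines are the additional static multicast entries you would need for a routing protocol.

```
!
interface Tunnel0
 ip address 192.168.1.1 255.255.255.0
 ip nhrp network-id 1
 ! One unicast mapping plus one multicast entry per spoke
 ip nhrp map 192.168.1.3 93.0.0.1
 ip nhrp map multicast 93.0.0.1
 ! 192.168.1.2 is an assumed tunnel address for the second spoke
 ip nhrp map 192.168.1.2 103.0.0.1
 ip nhrp map multicast 103.0.0.1
 tunnel source 83.0.0.1
 tunnel mode gre multipoint
!
```

Every new spoke adds two more lines here, which is exactly the per-spoke work we want to get rid of.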

3. Phase 1 DMVPN

Dynamic NHRP mappings use a server/client model where the hub acts as a server and the spokes as clients. Each spoke registers with the hub and this registration sends the tunnel IP and NBMA IP mapping to the hub. Because of this dynamic registration, initiated by the spoke, we can remove the previously configured static mapping entries on the hub.
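With the static entries removed, the hub side could look roughly like the sketch below. The `ip nhrp map multicast dynamic` line is my addition and only matters if you want to run a routing protocol over the tunnel; it lets the hub replicate multicast to every spoke that has registered.

```
!
interface Tunnel0
 ip address 192.168.1.1 255.255.255.0
 ip nhrp network-id 1
 ! Optional: send multicast to dynamically registered spokes
 ip nhrp map multicast dynamic
 tunnel source 83.0.0.1
 tunnel mode gre multipoint
!
```

Note that nothing in this configuration refers to an individual spoke.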

The important new configuration step to enable this registration is to assign the hub as the server on every spoke:

!

interface Tunnel0

ip address 192.168.1.3 255.255.255.0

ip nhrp network-id 1

ip nhrp nhs 192.168.1.1

tunnel source 93.0.0.1

tunnel destination 83.0.0.1

!

By using the NHRP registration feature we make it much easier to add a new spoke to the network because we no longer have to make any changes to the hub. Note that we’re still using point to point GRE tunnels on the spokes which means that all spoke to spoke communication must go via the hub. This is referred to as “phase 1” DMVPN. There are probably use cases for forcing all traffic to transit the hub, but it’s not the main selling point of DMVPN; we typically want to allow dynamic spoke to spoke tunnels to be established in order to bypass the hub completely in the data plane.
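If you want to check that a spoke has registered, the following exec commands on the hub are a reasonable starting point. I have left the output out since it varies between IOS versions; what you are looking for is a dynamic entry that maps 192.168.1.3 to the spoke’s NBMA address.

```
! Run on the hub in privileged exec mode
show ip nhrp
show dmvpn
```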

4. Phase 2 DMVPN

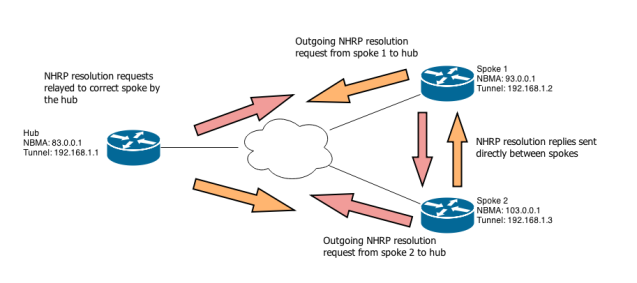

To allow the dynamic spoke to spoke tunnels to form we need to change the spokes to multipoint tunnels. As we do that, we also need to add a static NHRP entry for the hub on each spoke, because without that entry the spoke has no way to reach the hub and the NHRP registration cannot be sent. The question then becomes, how does a spoke learn the NHRP mapping of another spoke? A system of requests and replies is used where a spoke sends a request for a particular spoke that it wants to send traffic to. It then gets a reply from the spoke in question. Packet captures in IOS 15.2 reveal the following about this process:

- Spoke 1 forwards a packet with a next hop that is another spoke, spoke 2. There is no NHRP map entry for this spoke so an NHRP resolution request is sent to the hub.

- The request from spoke 1 contains the tunnel IP address of spoke 2. The hub relays the request to spoke 2.

- Spoke 2 receives the request, adds its own address mapping to it and sends it as an NHRP reply directly to spoke 1.

- Spoke 2 then sends its own request to the hub that relays it to spoke 1.

- Spoke 1 receives the request from spoke 2 via the hub and replies by adding its own mapping to the packet and sending it directly to spoke 2.

- Technically, the requests themselves provide enough information to build a spoke to spoke tunnel, but the replies accomplish two things. They acknowledge to the other spoke that the request was received, and they verify that spoke to spoke NBMA reachability exists.
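For reference, the spoke changes described at the start of this section (multipoint tunnel plus a static entry for the hub) could be sketched like this, reusing the addresses from the earlier examples. The `ip nhrp map multicast` line is my addition and is only needed if a routing protocol runs over the tunnel.

```
!
interface Tunnel0
 ip address 192.168.1.3 255.255.255.0
 ip nhrp network-id 1
 ! Static entry for the hub: tunnel IP 192.168.1.1 -> NBMA 83.0.0.1
 ip nhrp map 192.168.1.1 83.0.0.1
 ! Optional: send multicast (routing protocol hellos) to the hub
 ip nhrp map multicast 83.0.0.1
 ip nhrp nhs 192.168.1.1
 tunnel source 93.0.0.1
 ! mGRE replaces the fixed "tunnel destination 83.0.0.1"
 tunnel mode gre multipoint
!
```

Since the tunnel no longer has a fixed destination, the static map entry is the only thing that tells the spoke where to send its registration.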

The problem with this way of doing things is that each spoke must have a complete routing table where the prefixes that belong to each spoke must be present with a next hop that matches that spoke’s tunnel IP address. This has certain negative implications. First of all, you can’t summarize. Typically in a hub and spoke network, each spoke only needs to carry a default route to the hub, but due to the requirement of having the spokes’ next hops in the routing table, this is not possible. This inability to summarize could potentially put strain on the control plane and make the DMVPN network unable to scale above a certain size unless you buy bigger routers.

It also has implications for your routing protocols, especially OSPF. The network type must be broadcast (or non-broadcast) because the point to multipoint network type modifies the next hop of routes when they are sent from spoke to hub to spoke. Because the network type is broadcast, you are limited to two hubs with one being the DR and the other the BDR. If you use EIGRP you must disable next hop self (and split horizon) on the hub. There are also issues with building hierarchical DMVPN networks where a hub is a spoke to another hub. DMVPN phase 3 was introduced to fix these issues. Phase 3 will be covered in the next post.
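As a rough sketch of the phase 2 routing protocol settings mentioned above: the EIGRP AS number 100 is a placeholder, and setting the OSPF priority to 0 on the spokes is my addition (it keeps a spoke from being elected DR or BDR). You would use either the EIGRP or the OSPF variant, not both.

```
! Hub, EIGRP variant (AS 100 is an assumed value)
interface Tunnel0
 no ip next-hop-self eigrp 100
 no ip split-horizon eigrp 100
!
! Hub, OSPF variant
interface Tunnel0
 ip ospf network broadcast
!
! Spoke, OSPF variant
interface Tunnel0
 ip ospf network broadcast
 ! Assumption: spokes should never become DR/BDR
 ip ospf priority 0
!
```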