1. Introduction

Fragmentation is a serious problem, and even more so when using IPsec because of the severe performance degradation it can lead to. In this post we’ll first review what fragmentation is and why it occurs. This is followed by a discussion of maximum segment size, path MTU discovery, tunnels, and some potential solutions that you can deploy.

2. Background on Fragmentation

An IPv4 packet’s total length is stored in a 16-bit field, allowing you to create packets of up to 65,535 bytes, or around 65 kilobytes. If we assume that there is a certain amount of overhead involved in the processing of every packet, using larger packets should decrease the total processing burden for a given amount of data, for example by sending one 65 kB packet instead of a hundred 650 byte ones. There are also potential drawbacks, like increased delay if a low bandwidth link has to transfer a large packet before the receiver is able to process its data. Another potential problem is that an error in a packet results in it having to be resent, and the larger the packet, the more costly the retransmission.

Assuming that we have a high bandwidth, error free link, why don’t we see 65 kB IPv4 packets? It has to do with something called the maximum transmission unit (MTU), a feature of link layer technologies like Ethernet. While the IP packet is a software construct that could theoretically be of any size up to that limit, when packets need to be sent out a link, hardware limitations are imposed. Classic Ethernet has a maximum transmission unit of 1500 bytes. If you attempt to send a packet larger than this over the link, it will be dropped.

Knowing that IP packets can be very large but that the link layer has hardware limitations that prevent the transmission of packets above a certain size, how do we ensure that we can still deliver packets that are “too big”? The answer to this problem is called fragmentation. While the ideal situation is that each packet fits into one frame, it’s considered better to separate the larger packet into smaller ones rather than simply dropping all packets that exceed the MTU.

The entire fragmentation issue seems kind of dumb when you look at it today when almost everyone uses Ethernet. At first glance it seems like you could have avoided the problem by simply saying that a host is not allowed to send packets that are too close to Ethernet’s MTU (say max 1300 bytes), and then called it a day. There are a few problems with that view. First of all, Ethernet used to be just another link layer technology and not nearly as ubiquitous as it is now; there are other technologies with both smaller and larger MTUs. If fragmentation didn’t exist and an Ethernet based host sent our theoretical maximum sized frame of 1300 bytes, it wouldn’t be able to reach a host connected to a different underlying technology with a smaller MTU.

Secondly, a fundamental idea of the TCP/IP stack and networking in general is that we should separate functionality into layers as much as possible, and if the higher layer protocols were forced to craft packets of a certain size regardless of what link they were attached to, it would be more difficult to change that behaviour if a new layer 2 technology with larger (or smaller) MTU was deployed, e.g. Ethernet with jumbo frames.

A third concern would be that if hosts were creating packets of a fixed maximum size without awareness of the actual MTU, you could run into issues where the packet would get too big in transit due to additional encapsulation.

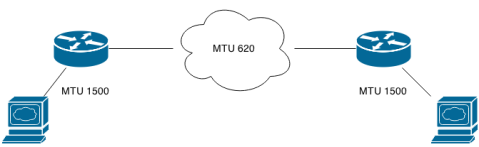

Instead, a host is able to create frames of all sizes from very small up to the MTU of the link it’s connected to. It should also be able to receive frames up to the maximum MTU. A classic scenario where this could cause fragmentation is this:

The hosts are connected to Ethernet that has an MTU of 1500 and the routers have one Ethernet interface and one unknown interface with an MTU of 620. The potential for fragmentation is there due to the changes in MTU across the path.

IPv4 and IPv6 handle fragmentation in fundamentally different ways. With IPv4, a router fragments a packet whose size exceeds the MTU of its outgoing interface. In our diagram above, if a host sends an 800 byte packet to one of the routers, the router will fragment it before sending the resulting two packets across the MTU 620 link. In IPv6, a router isn’t allowed to fragment packets. Instead, packets that are too big are dropped and an ICMP error message is sent back to the host. From this point on, the discussion applies to IPv4.

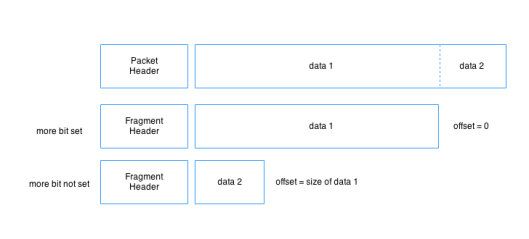

When an IPv4 router fragments a packet, it splits the packet’s data and attaches a copy of the original header to each piece, with certain fields adjusted. The “more fragments” bit in the header’s flags field is set on every fragment except the last one, and the offset field indicates where in the original packet the fragment’s data belongs. The first fragment has offset 0, and the next one has an offset equal to the amount of data carried in the first fragment. A packet is recognisable as a fragment when the more fragments bit is set or the offset is non-zero.

Each fragment is sized according to the MTU of the link that forced the fragmentation in the first place. In other words, in our example with the 800 byte packet over the 620 link, the first fragment will have a size of 620 and the second one 180 plus the size of a new header. This isn’t always exactly true, because offsets are stored in units of 8 bytes, so every fragment except the last must carry a multiple of 8 bytes of data. For example, if a fragment’s data starts at byte 80, the offset field will say 10, which saves space in the header. The idea is to make each fragment as large as possible while taking this multiple-of-8 rule into account.
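To make the sizing rule concrete, here is a minimal Python sketch of how a router could work out the fragments. It assumes a 20 byte IPv4 header without options, and the names `Fragment` and `fragment_ipv4` are made up for this illustration rather than taken from any real stack.

```python
from typing import List, NamedTuple

IPV4_HEADER_LEN = 20  # assumption: header without options


class Fragment(NamedTuple):
    offset_units: int     # value of the offset field, in 8-byte units
    total_length: int     # header plus data, in bytes
    more_fragments: bool  # the "more fragments" bit


def fragment_ipv4(packet_len: int, mtu: int) -> List[Fragment]:
    """Split a packet of packet_len bytes so that every piece fits in mtu."""
    if packet_len <= mtu:
        return [Fragment(0, packet_len, False)]
    payload = packet_len - IPV4_HEADER_LEN
    # Every fragment except the last must carry a multiple of 8 data bytes.
    max_data = (mtu - IPV4_HEADER_LEN) // 8 * 8
    if max_data <= 0:
        raise ValueError("MTU too small to carry any data")
    fragments = []
    sent = 0
    while sent < payload:
        data = min(max_data, payload - sent)
        more = sent + data < payload
        fragments.append(Fragment(sent // 8, IPV4_HEADER_LEN + data, more))
        sent += data
    return fragments


for frag in fragment_ipv4(800, 620):
    print(frag)
```

For the 800 byte packet on the 620 link this gives a 620 byte fragment at offset 0 and a 200 byte fragment (180 bytes of data plus the header) whose offset field is 75, i.e. 600 / 8.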

In IPv6, fragmentation is indicated by a new fragmentation extension header that contains largely the same information as an IPv4 fragment, like a bit indicating more fragments and an offset field. It also has an identification field that has a unique ID that is shared by all fragmentation headers originating from the same packet.

Once a packet has been fragmented, it’s not reassembled until it reaches its destination. The destination host uses the offset to order the fragments correctly, the more fragments bit to know which fragment is the last one in the set, and the identification field to know which fragments belong together. A lost fragment means that the packet can’t be reassembled, and once the reassembly timer has timed out, the other fragments must be discarded and the entire packet resent by the source. Reassembly by a host isn’t necessarily catastrophic since that host typically has the necessary processing power available to do so in a timely manner, but as we’ll see later, when tunnels are involved a router may have to do the reassembly. When a router reassembles fragments, throughput can dramatically decrease.
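The reassembly logic described above can be sketched roughly as follows. This is a simplified illustration: fragments are modelled as plain tuples, the `reassemble` helper is hypothetical, and the reassembly timer, duplicates, and overlapping fragments are ignored.

```python
from typing import List, Optional, Tuple

# Each fragment is (identification, offset_units, more_fragments, data).
Frag = Tuple[int, int, bool, bytes]


def reassemble(fragments: List[Frag], ident: int) -> Optional[bytes]:
    """Return the original payload for ident, or None if a piece is missing."""
    # The identification field selects the fragments that belong together,
    # and the offset puts them in order.
    pieces = sorted((f for f in fragments if f[0] == ident), key=lambda f: f[1])
    if not pieces or pieces[-1][2]:
        return None  # nothing received, or the final fragment has not arrived
    payload = b""
    for _, offset_units, _, data in pieces:
        if offset_units * 8 != len(payload):
            return None  # gap: an earlier fragment was lost
        payload += data
    return payload


received = [
    (7, 75, False, b"b" * 180),  # arrives first, but belongs last
    (7, 0, True, b"a" * 600),
    (9, 0, True, b"x" * 8),      # a different packet, still incomplete
]
print(len(reassemble(received, 7)))
print(reassemble(received, 9))
```

A real receiver would hold incomplete sets until the reassembly timer expires and then discard them, which is the costly case described above.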

3. Maximum Segment Size

So far we’ve learned that fragmentation might occur if there are changes in the MTU across the path between two hosts. Specifically, if the MTU decreases somewhere, the router connected to that lower MTU link could be forced to fragment packets. That explanation glosses over what happens on the host itself; why is the host sending a packet of a certain size? The IP process on the host gets application level data delivered via a transport protocol like TCP or UDP. This data, often called a “segment”, carries a TCP or UDP header and is then encapsulated with an IP header by the IP process. If the resulting packet is too big for the MTU of the link that the host is connected to, it’s fragmented before being sent. The receiver then has to reassemble it. Ideally you don’t want to fragment on the host at all, since it causes issues like having to resend all the fragments if one is lost, but some signalling mechanism is required in order to tell the transport layer what the appropriate segment size is. Once the transport layer understands this (TCP, specifically), it should only deliver segments to the IP process that result in a packet of a size that is equal to or smaller than the MTU. This mechanism is called the TCP Maximum Segment Size.

When a TCP session is being negotiated (you know, the three-way handshake), the SYN contains an option field where the host can specify what maximum segment size (MSS) it wants to use. It derives this number from the MTU of the link it’s connected to, subtracting 40 bytes to account for the IP and TCP headers. For example, if the MTU is 1500, the MSS will be 1460. The host with the lowest suggested MSS will dictate what the MSS for the session will be. By adhering to the MSS, TCP will only deliver segments to the IP process that result in packets small enough to fit the link without fragmentation, solving the problem of fragmentation on the host itself. UDP doesn’t have a similar mechanism and instead, what’s usually done is limiting the size of UDP segments in the application itself to a size that is guaranteed to fit any MTU.
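The arithmetic is simple enough to write down as a small sketch. It assumes 20 byte IP and TCP headers without options, and the function names are just for illustration.

```python
IP_HEADER_LEN = 20   # assumption: IPv4 header without options
TCP_HEADER_LEN = 20  # assumption: TCP header without options


def mss_from_mtu(mtu: int) -> int:
    """MSS a host would advertise for a link with the given MTU."""
    return mtu - IP_HEADER_LEN - TCP_HEADER_LEN


def session_mss(mtu_a: int, mtu_b: int) -> int:
    """The lower of the two advertised values ends up limiting the session."""
    return min(mss_from_mtu(mtu_a), mss_from_mtu(mtu_b))


print(mss_from_mtu(1500))       # 1460
print(session_mss(1500, 1400))  # 1360
```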

This MSS negotiation by itself is insufficient to solve fragmentation because while the hosts no longer fragment, you still have the issue of path MTU to contend with. The host can only look at its local link when setting the MSS option in the SYN; there could still be a lower MTU further down the path.

4. Path MTU Discovery

Path MTU Discovery (PMTUD) is designed to solve the problem of fragmentation by a router in the path between two hosts. Because it relies on MSS adjustment, it’s only supported by TCP. It works by utilizing the DF-bit (“don’t fragment”) in the IP header. If this bit is set, a packet is dropped instead of being fragmented and as the router drops the packet, an ICMP unreachable message is sent to the source of the packet indicating that it was dropped due to exceeding the MTU. The ICMP message also has a “next hop MTU” field where the MTU of the link that caused the packet to drop is inserted. This information is stored in a cache on the host and used to adjust the MSS on future traffic to the same destination. If the next hop MTU field isn’t used, the host uses an iterative process to reach an appropriate MTU. PMTUD is used continuously on all packets since the path may change dynamically, and it’s a unidirectional process; traffic in one direction might send packets that are too small to even trigger it, or routing is asymmetrical making MTU only relevant in one of the directions between the two hosts.
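As a rough sketch of the host side, the cache and the fallback behaviour could look something like this. The class name `PathMtuCache` and the fallback sizes in `PLATEAUS` are assumptions made for the example; a real stack uses its own table and ages entries out over time.

```python
from typing import Dict

# Illustrative fallback sizes for when the next hop MTU field is not filled in.
PLATEAUS = [1492, 1006, 508, 296, 68]


class PathMtuCache:
    def __init__(self, link_mtu: int) -> None:
        self.link_mtu = link_mtu
        self.by_destination: Dict[str, int] = {}

    def current(self, destination: str) -> int:
        """Path MTU currently assumed for a destination."""
        return self.by_destination.get(destination, self.link_mtu)

    def on_frag_needed(self, destination: str, next_hop_mtu: int) -> int:
        """Handle the ICMP error for a dropped packet towards destination."""
        old = self.current(destination)
        if 0 < next_hop_mtu < old:
            new = next_hop_mtu
        else:
            # Field unused (zero) or unhelpful: step down to the next size.
            new = next((p for p in PLATEAUS if p < old), PLATEAUS[-1])
        self.by_destination[destination] = new
        return new

    def mss(self, destination: str) -> int:
        """MSS to use for future traffic (40 bytes of IP and TCP headers)."""
        return self.current(destination) - 40


cache = PathMtuCache(link_mtu=1500)
cache.on_frag_needed("192.0.2.1", 1400)
print(cache.mss("192.0.2.1"))  # 1360
```

Because the cache is keyed on the destination, traffic to other hosts keeps using the local link MTU until an error arrives for them too.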

The problem with PMTUD is that it’s not 100% reliable. The most common problem is that some intermediary device, e.g. a firewall, blocks the ICMP unreachable message. Each ICMP message has a type that gives some information about the error, and several codes that are used to give further details. In our case the type is 3 for “unreachable” and the code is 4 (“fragmentation needed and DF set”, which IOS calls packet-too-big). It is possible to specifically allow ICMP with a certain combination of type and code while denying everything else. In IOS, permitting the ICMP used in PMTUD in an ACL would look like this:

!

access-list 100 permit icmp any any 3 4

!

The first number is the type and the second one is the code. In the running configuration the pair is shown simply as packet-too-big:

!

access-list 100 permit icmp any any packet-too-big

!
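To see what that type and code pair looks like on the wire, here is a small sketch that pulls the next hop MTU out of the first 8 bytes of an ICMP message. The function name `next_hop_mtu` is made up for the example, and the checksum is not verified.

```python
import struct
from typing import Optional

ICMP_DEST_UNREACHABLE = 3
ICMP_CODE_FRAG_NEEDED = 4


def next_hop_mtu(icmp_message: bytes) -> Optional[int]:
    """Return the next hop MTU if this is a type 3, code 4 message."""
    if len(icmp_message) < 8:
        return None
    # Fields in network byte order: type, code, checksum, unused, next hop MTU.
    icmp_type, code, _checksum, _unused, mtu = struct.unpack(
        "!BBHHH", icmp_message[:8]
    )
    if icmp_type != ICMP_DEST_UNREACHABLE or code != ICMP_CODE_FRAG_NEEDED:
        return None
    return mtu


sample = struct.pack("!BBHHH", 3, 4, 0, 0, 620)
print(next_hop_mtu(sample))  # 620
```

An ACL entry like the one above is what lets this particular message through while other ICMP is still denied.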

However, the device that messes up your PMTUD might not even be under your administrative control, making it unfeasible to make this change to the ICMP filter. Because the DF-bit is set in the IP header by hosts who use PMTUD, you could end up in a situation where you simply can’t reach a host because the packet is dropped without the error message getting back to the sender telling it to lower MSS (in reality, the TCP stack can figure out that packets are dropped because they are too big and will eventually try smaller packets).

There are a few workarounds to this, with the first one being to simply remove the DF-bit from the packets on a router that we have control over. In doing so, we’re allowing the router with the lower MTU link further down the path to fragment the packet instead of dropping it. This is generally not recommended. The more common solution is to use the “MSS clamping” feature, which changes the MSS field in any inbound or outbound TCP SYNs that traverse the interface. The hosts will then use this lowered MSS rather than what they would normally use (local link MTU minus 40 bytes), resulting in packets that are small enough to pass. It’s important to remember that you shouldn’t use MSS clamping on a provider/enterprise edge device because it would open it up to a (D)DoS attack where an attacker sends a large number of SYNs that the router must process and adjust the MSS of, potentially melting the control plane. Ensure that you do not put yourself in a situation where that is possible. The feature is activated at the interface level with this command:

!

interface gig0/0

ip tcp adjust-mss x

!

It is supposed to apply to both IPv4 and IPv6 traffic as of some relatively recent IOS version.
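Conceptually, what the router does to each SYN can be sketched like this. The sketch only rewrites the options bytes of a TCP header; the function name `clamp_mss` is hypothetical, and a real device would also have to update the TCP checksum afterwards.

```python
import struct

TCP_OPT_EOL = 0  # end of option list
TCP_OPT_NOP = 1  # padding
TCP_OPT_MSS = 2  # maximum segment size, total length 4


def clamp_mss(options: bytes, clamp: int) -> bytes:
    """Return the TCP options of a SYN with any MSS above clamp lowered to it."""
    out = bytearray(options)
    i = 0
    while i < len(out):
        kind = out[i]
        if kind == TCP_OPT_EOL:
            break
        if kind == TCP_OPT_NOP:
            i += 1
            continue
        if i + 1 >= len(out) or out[i + 1] < 2:
            break  # malformed option list; leave the rest untouched
        length = out[i + 1]
        if kind == TCP_OPT_MSS and length == 4 and i + 4 <= len(out):
            (mss,) = struct.unpack_from("!H", out, i + 2)
            if mss > clamp:
                struct.pack_into("!H", out, i + 2, clamp)
        i += length
    return bytes(out)


# MSS 1460, a NOP, then a window scale option that must be left alone.
syn_options = bytes([2, 4, 0x05, 0xB4, 1, 3, 3, 7])
print(clamp_mss(syn_options, 1360).hex())
```

An MSS that is already at or below the configured value is left untouched, so hosts on smaller links are not affected.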

6. Tunneling

Some kind of tunneling is usually involved whenever we have an MTU issue, due to the extra overhead it adds. GRE, for example, adds an extra 24 bytes of header, effectively reducing the size of the data portion of the packet by that amount if you want to avoid fragmentation. Fragmentation and tunneling become somewhat complicated because a router that has a tunnel endpoint has two separate roles. The first role is as a packet forwarder that looks at an incoming packet and determines that the tunnel interface is the outgoing interface. It checks the MTU of the tunnel and compares it to the size of the packet, and if the packet is equal to or smaller than the MTU, the packet is sent normally. If it’s bigger and the DF-bit is set, the packet is dropped and the router sends the ICMP error message. If it’s bigger and the DF-bit isn’t set, the router does fragmentation. If fragmentation has to happen before the packet can be sent out the tunnel, you could either fragment first and then add the encapsulation, or add the encapsulation and then fragment. In the case of a GRE tunnel, fragmentation happens first, and once that’s done the encapsulation is added.
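The forwarding role boils down to a three-way decision, sketched below. The function names are made up for the illustration, and the 24 bytes are the 20 byte outer IP header plus a 4 byte GRE header.

```python
GRE_OVERHEAD = 24  # 20-byte outer IP header plus 4-byte GRE header


def gre_tunnel_mtu(physical_mtu: int) -> int:
    """Largest packet that still fits the physical link after encapsulation."""
    return physical_mtu - GRE_OVERHEAD


def forward_into_tunnel(packet_len: int, df_set: bool, mtu: int) -> str:
    """What the tunnel endpoint does with a packet routed into the tunnel."""
    if packet_len <= mtu:
        return "encapsulate and send"
    if df_set:
        return "drop and send ICMP error"
    # GRE fragments the original packet first, then wraps each fragment.
    return "fragment, then encapsulate each fragment"


mtu = gre_tunnel_mtu(1500)
print(mtu)                                    # 1476
print(forward_into_tunnel(1476, True, mtu))   # encapsulate and send
print(forward_into_tunnel(1500, True, mtu))   # drop and send ICMP error
print(forward_into_tunnel(1500, False, mtu))  # fragment, then encapsulate each fragment
```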

Now it gets a bit tricky. When the router with the tunnel source sends the packet, that router conceptually becomes a host because the source address in the outer IP header is the router’s tunnel endpoint. What can happen then is that the tunnelled packet runs into MTU issues along the path between the tunnel source and the tunnel destination. If fragmentation happens to the tunnelled packet, the tunnel destination has to reassemble it before decapsulation. Once that’s done, the router can forward it to the actual destination. Like we mentioned before, it’s not catastrophic if an actual host has to reassemble packets because they typically have the processing power available to do that without too much trouble. For a router however, having to reassemble packets arriving on a tunnel interface is bad news and it degrades performance, especially if IPsec is involved as well.

When a host uses PMTUD the DF-bit is set in the IP header, but by default this is not copied to the outer IP header that GRE encapsulation adds. In other words, if a packet arrives with the DF-bit set, the new outer header will not have the DF-bit set. The DF-bit will determine if the packet is allowed to enter the tunnel itself, but once it’s encapsulated, it can be fragmented by some router in the middle that just sees an IP packet going between the two tunnel endpoints. This behaviour can be changed by enabling tunnel path MTU discovery (tunnel path-mtu-discovery) under the tunnel interface. If this is enabled, the DF-bit is copied from the original IP header to the outer IP header used to tunnel the packet. This should, if path MTU discovery is working, result in routers along the path sending ICMP messages back to the tunnel source instead of fragmenting the packet. The problem with relying on tunnel PMTUD, and PMTUD in general, is that it adds latency because it takes several dropped packets before we arrive at the correct packet size. This example should give you an idea why it’s not a good idea to rely on PMTUD for any kind of time sensitive application:

- The host on the left sends a 1500 byte packet to R1 with the df-bit set.

- R1’s outgoing interface for that packet is the tunnel which has a default MTU of 1476 because the physical interface is 1500 and GRE adds 24 bytes of overhead. Because the df-bit is set by the host, R1 has no choice but to drop the packet.

- The host receives the ICMP error message from R1 and adjusts the packet size to 1476 and sends a packet of that size to R1.

- R1 receives the 1476 sized packet from the host and sends it out the tunnel and copies the df-bit to the outer header because tunnel path mtu discovery is enabled.

- R2 receives the GRE encapsulated packet and drops it because the MTU is 1400 on its link to R3. The df-bit is set so R1 is informed of this and lowers the MTU of the tunnel.

- The host sends another packet sized 1476 but this time R1 drops it because tunnel MTU has been lowered. R1 informs the host of this via ICMP.

- The host sends a fourth packet, this time it’s small enough to reach the host on the other side.

This kind of prolonged negotiation of packet size would work for transfers that aren’t time sensitive, but if they are, it’s not that great obviously.
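The walk-through above can be replayed with a short simulation. It is a simplified model under a few assumptions: a single low MTU link between the tunnel endpoints, every ICMP message arriving intact, and a hypothetical `negotiate` function standing in for the host, R1, and R2.

```python
from typing import List

GRE_OVERHEAD = 24  # bytes added by GRE encapsulation


def negotiate(host_size: int, tunnel_mtu: int, path_mtu: int) -> List[int]:
    """Return the packet sizes the host sends until one gets through."""
    attempts = []
    while True:
        attempts.append(host_size)
        if host_size > tunnel_mtu:
            # R1 drops the packet and reports its tunnel MTU to the host.
            host_size = tunnel_mtu
            continue
        if host_size + GRE_OVERHEAD > path_mtu:
            # A router between the endpoints drops the encapsulated packet and
            # tells R1, which lowers the tunnel MTU. The host hears nothing yet.
            tunnel_mtu = path_mtu - GRE_OVERHEAD
            continue
        return attempts


print(negotiate(host_size=1500, tunnel_mtu=1476, path_mtu=1400))
# [1500, 1476, 1476, 1376]
```

Three of the four packets are lost, and each loss costs at least a round trip before the host can try again.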

7. Adding IPsec

If we add IPsec to our GRE tunnel, we essentially have the same problems, except that IPsec adds additional overhead on top of GRE. Just like with GRE alone, we can have fragmentation before the packets enter the tunnel, and fragmentation after encapsulation when the packet is travelling between the tunnel endpoints. If we use IPsec, the second type, where the fragmentation happens between the tunnel endpoints, is the most damaging. The reason is that the tunnel destination must reassemble these fragments using process switching before sending the packet to the hardware crypto engine. The degradation in performance can be very significant. Another scenario is “double fragmentation”, where a packet arrives at the GRE tunnel and gets fragmented. The fragments are encapsulated with GRE headers and sent to the IPsec process. IPsec adds additional encapsulation, resulting in packets that are now too big to leave the physical interface. The GRE+IPsec packets must be fragmented again.
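A quick calculation shows how the second fragmentation comes about. Note that the IPsec overhead of 60 bytes used here is only an assumed figure for illustration; the real number depends on the IPsec mode and the algorithms in use.

```python
PHYSICAL_MTU = 1500
GRE_OVERHEAD = 24
IPSEC_OVERHEAD = 60  # assumed for illustration; varies with mode and algorithms


def needs_second_fragmentation(inner_len: int) -> bool:
    """True if a packet outgrows the physical MTU once GRE and IPsec are added."""
    return inner_len + GRE_OVERHEAD + IPSEC_OVERHEAD > PHYSICAL_MTU


# A fragment sized for the default GRE tunnel MTU of 1476:
print(needs_second_fragmentation(1476))  # True, 1476 + 24 + 60 = 1560
# A packet sized for a tunnel MTU of 1400:
print(needs_second_fragmentation(1400))  # False, 1400 + 24 + 60 = 1484
```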

8. Solutions

The recommended solution to tunnel MTU issues is to deploy a combination of features to protect yourself against several different scenarios.

The first thing you need to do is to adjust the MTU of the tunnel to around 1400 bytes. The default GRE MTU is 1476 and this does not take the IPsec overhead into account. Note here that if you use the Virtual Tunnel Interface (VTI) feature that I covered in a previous post, the MTU is automatically set to 1436, removing the need for manual MTU adjustment. If you make the adjustment to 1400, you ensure that incoming packets aren’t fragmented after IPsec because packet size will never exceed the physical interface’s 1500 byte MTU. What can still happen however is that incoming packets will be larger than what can be accommodated by a 1400 byte MTU. In that case, the packets will either be dropped (if df-bit is set) or fragmented before GRE and IPsec. Still, that is better than the alternative.

In addition to the tunnel MTU adjustment, you should also deploy MSS clamping with the ip tcp adjust-mss command. This should prevent the situation where a host sends a packet that is too big before PMTUD has kicked in, or when PMTUD isn’t working due to some ICMP filter. If tunnel MTU is set to 1400, you should set adjust-mss to 1360 to take the 40 byte IP and TCP overhead into account.
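The two numbers are related, and a small helper makes the relationship explicit. It is a sketch with a made-up function name that assumes a 1500 byte physical MTU, 24 bytes of GRE overhead, and 40 bytes of IP and TCP headers.

```python
PHYSICAL_MTU = 1500
GRE_OVERHEAD = 24
IP_TCP_HEADERS = 40


def tunnel_settings(tunnel_mtu: int) -> dict:
    """Derive the adjust-mss value and the room left for IPsec overhead."""
    return {
        "tunnel_mtu": tunnel_mtu,
        "adjust_mss": tunnel_mtu - IP_TCP_HEADERS,
        "ipsec_headroom": PHYSICAL_MTU - GRE_OVERHEAD - tunnel_mtu,
    }


print(tunnel_settings(1400))
# {'tunnel_mtu': 1400, 'adjust_mss': 1360, 'ipsec_headroom': 76}
```

With a tunnel MTU of 1400 there are 76 bytes left for whatever IPsec adds before the physical interface’s 1500 bytes are exceeded, whereas the default of 1476 leaves none.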

You could also use tunnel path MTU discovery. This could protect your packets from fragmentation if they are too big for the underlying links between your tunnel endpoints. On the other hand, if PMTUD isn’t working, your packets would be unceremoniously dropped instead. However, having the packets dropped might be better than taking the performance hit from reassembly, since you get immediate feedback that there’s a problem. If packets are black holed you could adjust tunnel MTU and MSS downwards until they are not.

9. Sources

Internetworking with TCP/IP 6th Edition by Douglas Comer

Resolve IP Fragmentation, MTU, MSS, and PMTUD Issues with GRE and IPSEC, Document ID: 25885

http://blog.ipspace.net/2013/01/tcp-mss-clamping-what-is-it-and-why-do.html